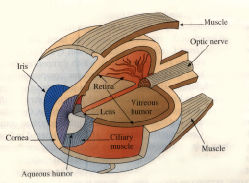

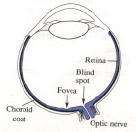

The mammalian eye is a complicated structure, but it is not difficult to understand how it produces images, at least to first approximation. The human eye is fairly typical of mammalian eyes and is shown below. Light enters through the transparent cornea, whose form is approximately spherical. The light then passes through a chamber containing clear liquid called the aqueous humor. At the rear of the chamber is a muscular, ring-shaped structure called the iris. (It is the pigmented membranous cover of the iris that determines eye color.) The circular aperture in the ring is called the pupil. By contracting and relaxing under control of the autonomic nervous system, the iris regulates the diameter of the pupil and thus the amount of light passing farther into the eye.

Just behind the iris is the crystalline lens, an elastic, transparent capsule. The lens is mounted in the ciliary muscle. This muscle can squeeze on the lens, making it bulge and thus reducing its focal length. Behind the lens is a chamber filled with a clear, jellylike fluid called the vitreous humor. At the rear of the chamber is a highly complex, light-sensitive structure called the retina. The refracting structures of the eye, the cornea and the lens, cast an image on the retina. The retina converts light into electrical signals that are transmitted and partially processed by the optic nerve. The final processing and interpretation take place in the brain.

Most visual sensations have their point of origin in some external (distal) object. Occasionally, this object will be a light source that emits light in its own right; examples (in rather drastically descending order of emission energy) are the sun, an electric light bulb, and a glow worm. All other objects can only give off light if some light source illuminates them. They will then reflect some portion of the light cast upon them while absorbing the rest.

The stimulus energy we call light comes from the relatively small band of radiations to which our visual system is sensitive. These radiations travel in a wave form that is somewhat analogous to the pressure waves that are the stimulus for hearing. This radiation can vary in its intensity, the amount of radiant energy per unit of time, which is a major determinant of perceived brightness (as in two bulbs of different wattage). It can also vary in wavelength, the distance between the crests of two successive waves, which is a major determinant of perceived color. The light we ordinarily encounter is made up of a mixture of different wavelengths. The range of wavelengths to which our visual system can respond is the visible spectrum, extending from roughly 400 ("violet") to about 750 ("red") nanometers (1 nanometer = 1 millionth of a millimeter) between successive crests.

The next stop in the journey from stimulus to visual sensation is the eye. Except for the retina, none of its major structures has anything to do with the transduction of the physical stimulus energy into neurological terms. Theirs is a prior function: to fashion a proper proximal stimulus for vision, a sharp retinal image, out of the light that enters from outside.

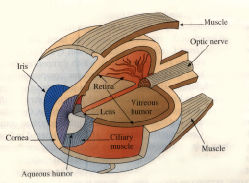

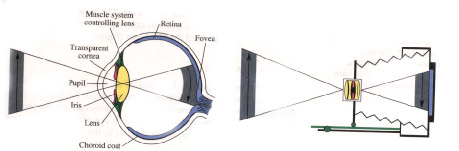

Let us briefly consider how this task is accomplished. The eye has often been compared to a camera, and in its essentials this analogy holds up well enough (see below). Both eye and camera have a lens, which suitably bends (or refracts) light rays passing through it and thus projects an image upon a light-sensitive surface behind: the film in the camera, the retina in the eye. (In the eye, refraction is accomplished by both the lens and the cornea, the eye's transparent outer coating.) Both have a focusing mechanism: in the eye this is accomplished by a set of muscles that changes the shape of the lens; it is flattened for objects at a distance and thickened for objects closer by, a process technically known as accommodation. Finally, both camera and eye have a diaphragm that governs the amount of entering light. In the eye this function is performed by the iris, a smooth, circular muscle that surrounds the pupillary opening and that contracts or dilates under reflex control when the amount of illumination increases or decreases substantially.

Eye and Camera

As an accessory apparatus for fashioning a sharp image out of the light that enters from outside, the eye has many similarities to the camera. Both have a lens for bending light rays to project an inverted image upon a light-sensitive surface at the back. In the eye a transparent outer layer, the cornea, participates in this light-bending. The light-sensitive surface in the eye is the retina, whose most sensitive region is the fovea. Both eye and camera have a focusing device; in the eye, the lens can be thickened or flattened. Both have an adjustable iris diaphragm. And both, finally, are encased in black to minimize the effects of stray light; in the eye this is done by a layer of darkly pigmented tissue, the choroid coat.

The image of an object that falls upon the retina is determined by simple optical geometry. Its size will be inversely proportional to the distance of the object, while its shape will depend on its orientation. Thus, a rectangle viewed at a slant will project as a trapezoid.
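The inverse relation between distance and image size can be made concrete with a small sketch. It assumes a simplified "reduced eye" in which light rays cross at a single nodal point roughly 17 mm in front of the retina; that figure and the function names are illustrative assumptions, not values given in the text.

```python
import math

# Assumption (not from the text): a "reduced eye" with a single nodal point
# about 17 mm in front of the retina.
NODAL_TO_RETINA_MM = 17.0


def visual_angle_deg(object_size_m, distance_m):
    """Angle subtended at the eye by an object viewed face-on."""
    return math.degrees(2 * math.atan(object_size_m / (2 * distance_m)))


def retinal_image_size_mm(object_size_m, distance_m):
    """Similar-triangles estimate of the size of the retinal image."""
    return NODAL_TO_RETINA_MM * object_size_m / distance_m


# A 1.8 m tall person seen from 1 m, 2 m, and 4 m away.
for distance in (1.0, 2.0, 4.0):
    size = retinal_image_size_mm(1.8, distance)
    angle = visual_angle_deg(1.8, distance)
    print(f"{distance:.0f} m: image {size:.2f} mm, visual angle {angle:.1f} deg")
```

Under this approximation, each doubling of the viewing distance halves the size of the retinal image.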

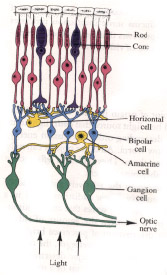

The retina:

There are three main retinal layers: the rods and cones, which are the photoreceptors; the bipolar cells; and the ganglion cells, whose axons make up the optic nerve. There are also two other kinds of cells, horizontal cells and amacrine cells, that allow for sideways (lateral) interaction. As shown in the diagram, the retina contains an anatomical oddity. As it is constructed, the photoreceptors are at the very back, the bipolar cells are in between, and the ganglion cells are at the top. As a result, light has to pass through the other layers (they are not opaque, so this is possible) to reach the rods and cones, whose stimulation starts the visual process.

Fovea and blind spot

The fovea is the region on the retina in which the receptors are most densely packed. The blind spot is a region where there are no receptors at all, this being the point where the optic nerve leaves the eyeball.

Quite apart from the question of what an object is (which we'll take up later), we have to know where it is located. The object may be a potential mate or a saber-toothed tiger, but we can hardly take appropriate action unless we can also locate the object in the external world.

Much of the work on the problem of visual localization has concentrated on the perception of depth, a topic that has occupied philosophers and scientists for over three hundred years. They have asked: How can we possibly see the world in three dimensions when only two of these dimensions are given in the image that falls upon the eye? This question has led to a search for depth cues, features of the stimulus situation that indicate how far the object is from the observer or from other objects in the world.

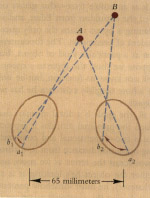

A very important cue for depth comes from the fact that we have two eyes. These look out on the world from two different positions. As a result, they obtain a somewhat different view of any solid object they converge on. This binocular disparity inevitably follows from the geometry of the physical situation. The disparity becomes less and less pronounced the farther the object is from the observer. Beyond thirty feet the two eyes receive virtually the same image (Figure 6.1).

Binocular disparity alone can induce perceived depth. If we draw or photograph the two different views received by each eye while looking at a nearby object and then separately present each of these views to the appropriate eye, we can obtain a striking impression of depth.
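The way disparity fades with distance follows from the viewing geometry and can be sketched numerically. The example below assumes the eyes are about 6.5 cm apart and that the points being compared lie straight ahead; both the separation value and the function names are illustrative assumptions rather than figures from the text.

```python
import math

# Assumption (not from the text): the two eyes are about 6.5 cm apart.
INTEROCULAR_M = 0.065


def vergence_angle_deg(distance_m):
    """Angle between the two lines of sight converging on a point straight ahead."""
    return math.degrees(2 * math.atan(INTEROCULAR_M / (2 * distance_m)))


def disparity_deg(near_m, far_m):
    """Difference in vergence angle between two points at different depths."""
    return vergence_angle_deg(near_m) - vergence_angle_deg(far_m)


# The same 0.5 m step in depth, viewed from increasing distances.
for distance in (0.5, 2.0, 9.0):  # 9 m is roughly thirty feet
    print(f"{distance:4.1f} m: {disparity_deg(distance, distance + 0.5):.3f} deg")
```

The same half-meter step in depth yields a much smaller angular difference at nine meters than at arm's length, which is consistent with the two eyes receiving nearly the same image of distant scenes.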

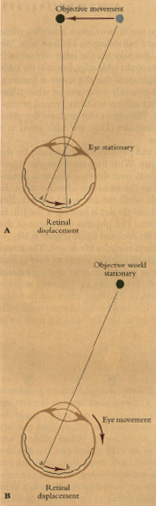

Thus far we have encountered situations in which both the observer and the scene observed are stationary. But in real life we are constantly moving through the world we perceive. This motion provides a vital source of visual information about the spatial arrangement of the objects around us, a pattern of cues that once again follows from the optical geometry of the situation.

As we move our heads or bodies from right to left, the images projected by the objects outside will necessarily move across the retina. This motion of the retinal images is an enormously effective depth cue, called motion parallax. As we move through space, nearby objects appear to move very quickly in a direction opposite to our own; as an example, consider the trees racing backward as one looks out of a speeding train. Objects farther away also seem to move in the opposite direction, but at a lesser velocity.
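A rough sketch of why nearer objects sweep past faster: for a stationary object directly to the side of an observer moving in a straight line, the angular speed of the object is approximately the observer's speed divided by the object's distance. The train speed and object distances below are made-up illustrative values, and the function name is hypothetical.

```python
import math


def angular_speed_deg_per_s(observer_speed_m_s, distance_m):
    """Angular speed of a stationary object directly abeam of a moving observer.

    Uses omega = v / d (radians per second), which holds at the moment the
    object is exactly to the side of the observer's path.
    """
    return math.degrees(observer_speed_m_s / distance_m)


# Hypothetical scene: a train moving at 30 m/s past objects at various distances.
train_speed = 30.0
for label, distance in (("trackside tree", 10.0),
                        ("farmhouse", 200.0),
                        ("distant hill", 2000.0)):
    speed = angular_speed_deg_per_s(train_speed, distance)
    print(f"{label:>15}: {speed:8.2f} deg/s")
```

The apparent motion is in the direction opposite to the observer's travel in every case; only its magnitude shrinks with distance.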

According to James Gibson, such movement-produced changes in the optic image are a major avenue through which we obtain perceptual information about depth relations in the world around us. Motion parallax is just one example of the perceptual role played by these changes. Another is the optic flow that occurs when we move towards or away from objects in the world. As we approach them, they get larger and larger; as we move away from them, they get smaller. Figure 6.8A shows the flow pattern for a person looking out of the back of a train; Figure 6.8B shows the flow pattern for a pilot landing an airplane.

INNATE FACTORS IN DEPTH PERCEPTION

In many organisms, important features of the perception of space are apparently built into the nervous machinery. An example is the localization of sounds in space. One investigator studied this phenomenon in a ten-minute-old baby. The newborn consistently turned his eyes in the direction of a clicking sound, thus demonstrating that some spatial coordination between eye and ear exists prior to learning.
